Edge AI in Robotics: Smarter, Faster, More Efficient

Robots aren’t what they used to be. Not long ago, many robots had to send their heavier decisions through cloud servers. That meant delays, dependence on a stable internet connection, and serious limitations when robots needed to think on their feet. Edge AI is changing that.

Here’s the thing: Edge AI shifts the brainpower to the device itself. Robots don’t need to wait for answers from distant servers. They process what they sense and act on it locally, without a network round trip. Whether it’s a robotic arm on a factory floor or an agricultural drone scanning crops, Edge AI is making them smarter, faster, and more reliable.

Let’s break it down and see how this shift is playing out.

What Is Edge AI and Why It Matters

Edge AI refers to artificial intelligence that runs locally on hardware devices, instead of relying on cloud-based servers. The processing happens on-site. No internet lag. No round-trip communication.

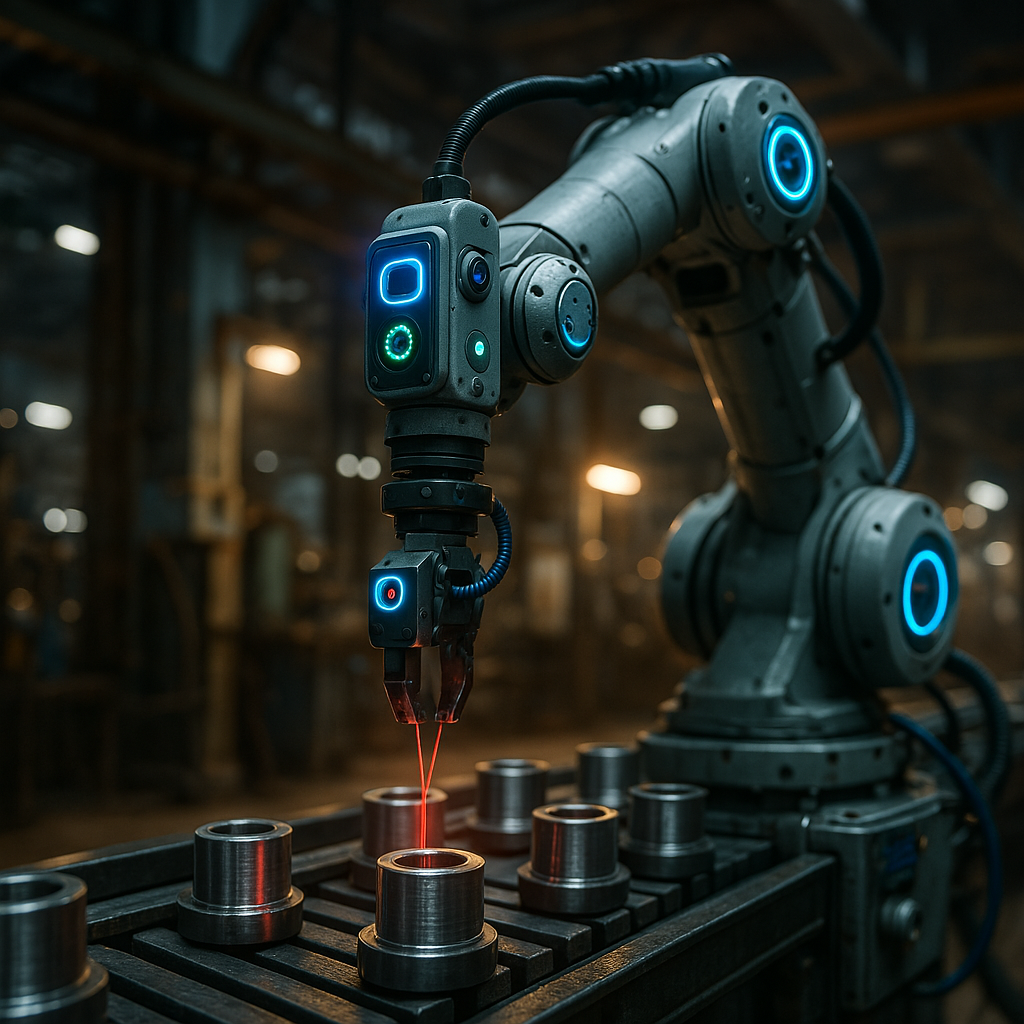

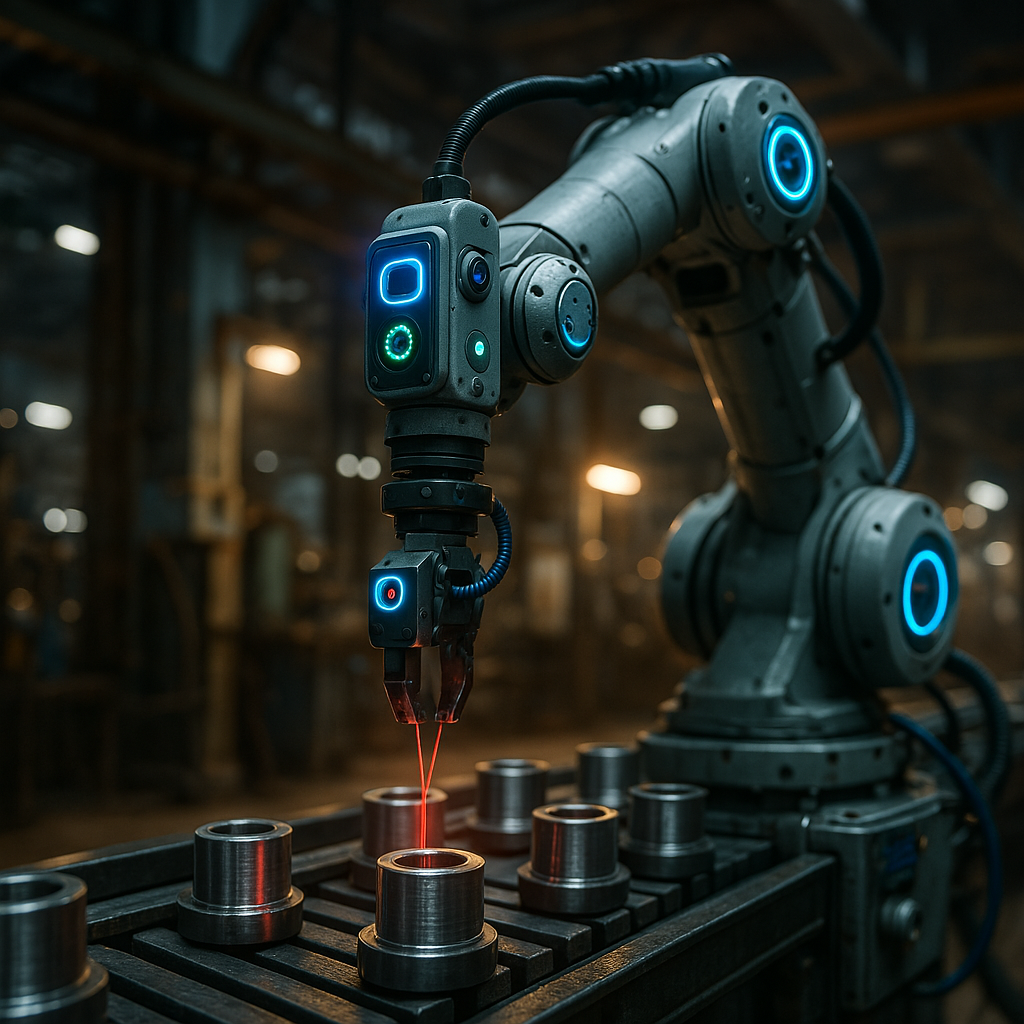

For robotics, this means real-time action. A manufacturing robot can detect and react to a defective part instantly. A warehouse bot can avoid a collision the moment a human crosses its path. The difference is not just speed, but autonomy.

This kind of independence is a big deal. Robots no longer pause to think. They just act.

Cloud Robotics vs Edge Robotics

To see why this matters, compare the two setups:

Cloud Robotics relies on a constant connection to remote servers. Every image, signal, or sensor input must travel to the cloud, get processed, and then come back with a command. If your network hiccups, so does your robot.

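Edge Robotics keeps everything local. The camera, sensors, and AI model are all packed into the device. Moment-to-moment decisions no longer depend on bandwidth, response times improve, and because raw sensor data doesn’t have to leave the robot, there is less for an attacker to intercept.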

It’s not just a technical improvement. It’s a shift in how we build and trust autonomous systems.
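To put rough numbers on the comparison, here is a minimal Python sketch of a single sense-decide-act step under each setup. Every timing value, the 50 ms deadline, and the function names (`cloud_latency_ms`, `edge_latency_ms`, `meets_deadline`) are hypothetical placeholders chosen for illustration, not measurements from any real system.

```python
# Hypothetical latency budget for one perception-to-action decision.
# All figures are illustrative placeholders, not measurements.

DEADLINE_MS = 50.0  # assumed: the robot must react within 50 ms


def cloud_latency_ms(capture, uplink, server_inference, downlink):
    """Sensor capture + send to cloud + remote inference + command back."""
    return capture + uplink + server_inference + downlink


def edge_latency_ms(capture, device_inference):
    """Sensor capture + on-device inference; no network hop."""
    return capture + device_inference


def meets_deadline(latency_ms, deadline_ms=DEADLINE_MS):
    """True if the decision arrives in time to act on it."""
    return latency_ms <= deadline_ms


if __name__ == "__main__":
    # Assumed numbers: the on-device model is slower than a server GPU,
    # but the edge path skips the network round trip entirely.
    normal = cloud_latency_ms(capture=5, uplink=15, server_inference=5, downlink=15)
    hiccup = cloud_latency_ms(capture=5, uplink=120, server_inference=5, downlink=120)
    edge = edge_latency_ms(capture=5, device_inference=25)

    for label, ms in [("cloud (normal)", normal),
                      ("cloud (network hiccup)", hiccup),
                      ("edge", edge)]:
        status = "OK" if meets_deadline(ms) else "MISSED"
        print(f"{label:24s} {ms:6.1f} ms  {status}")
```

The point of the sketch is the shape of the sum: the edge path has no network terms, so a slow or dropped connection cannot push it past the deadline, even when its on-device model is slower than a server GPU.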

Real-World Applications Already in Motion

Manufacturing: Smart arms and conveyors now detect anomalies without cloud input. They flag defects, adjust grip strength, or stop operations—all within milliseconds.

Agriculture: Drones fitted with edge AI models scan crops and soil patterns mid-flight. They deliver insights before landing. Ground bots spot pests or nutrient gaps and alert farmers instantly.

Logistics: In a warehouse, timing is everything. Edge-powered bots reroute in real time when aisles get blocked or crowded. Less downtime, no drama.

These aren’t future plans. This is happening now.
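At its core, the warehouse rerouting described above can be as simple as a shortest-path search the robot runs on its own processor. The sketch below assumes a hypothetical grid map where `#` marks a blocked cell and uses breadth-first search; the function name `shortest_path` is illustrative, and real fleets typically use richer planners and live sensor maps. Read it as an example of a decision that needs no server round trip, not as how any particular product works.

```python
from collections import deque


def shortest_path(grid, start, goal):
    """Breadth-first search on a 4-connected grid.

    grid: list of strings, '#' = blocked cell, '.' = free cell.
    start, goal: (row, col) tuples.
    Returns the list of cells from start to goal, or None if unreachable.
    """
    rows, cols = len(grid), len(grid[0])
    came_from = {start: None}  # visited set plus predecessor links
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk back through predecessors to rebuild the route.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if (0 <= nr < rows and 0 <= nc < cols
                    and grid[nr][nc] != '#' and (nr, nc) not in came_from):
                came_from[(nr, nc)] = cell
                queue.append((nr, nc))
    return None


if __name__ == "__main__":
    # Hypothetical map: two aisles separated by a shelf row.
    warehouse = [
        ".....",
        ".###.",
        ".....",
    ]
    print("route:  ", shortest_path(warehouse, (0, 0), (0, 4)))

    # Something now blocks the top aisle, so the bot replans locally.
    blocked = [
        "..#..",
        ".###.",
        ".....",
    ]
    print("reroute:", shortest_path(blocked, (0, 0), (0, 4)))
```

Because breadth-first search explores cells in order of distance, the first time it reaches the goal it has found a shortest route, and replanning after a blockage is just another call on the updated map.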


Why the Shift Makes Sense

Here’s what Edge AI brings to the table:

- Speed: No more waiting. Everything runs locally, which cuts out network round-trip delays.

- Stability: With no need for a constant internet connection, robots keep working even in remote areas or when the network drops.

- Security: Data stays on the device. That limits exposure and makes breaches less likely.

- Scalability: Add more robots without overloading your cloud infrastructure.

- Energy Efficiency: Less data transmission can mean lower power use, especially for mobile bots.

This isn’t just better performance. It’s smarter resource use across the board.

The Technical Hurdles That Still Exist

Nothing is perfect. Edge AI comes with trade-offs:

- Processing Power: Devices have limits. You can’t run massive models on a tiny microchip.

- Model Compression: Making models small enough to run on-device often means losing detail.

- Battery Constraints: Mobile robots balancing AI workloads and battery life have tough choices to make.

- Hardware Cost: Not every company can afford AI-ready edge devices right out of the gate.

These are solvable problems, but they slow down adoption in certain sectors.
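Model compression often starts with quantization: storing weights as 8-bit integers instead of 32-bit floats, which shrinks weight storage by about four times. The pure-Python sketch below shows symmetric per-tensor int8 quantization on a few made-up weights and measures the rounding error, which is the “lost detail” mentioned above. The function names `quantize_int8` and `dequantize` are hypothetical, and production toolchains add steps such as calibration and per-channel scales; this is only the core idea.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization to the int8 range [-127, 127].

    Returns the integer codes and the single float scale needed to
    map them back to real values.
    """
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # avoid dividing by zero
    # Round to the nearest code; the clamp is a safeguard against float error.
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Map integer codes back to approximate float weights."""
    return [q * scale for q in codes]


if __name__ == "__main__":
    # Made-up weights for illustration only.
    weights = [0.82, -0.41, 0.003, 1.27, -1.05, 0.0007]
    codes, scale = quantize_int8(weights)
    restored = dequantize(codes, scale)
    max_err = max(abs(w - r) for w, r in zip(weights, restored))
    print("codes:", codes)
    print(f"scale: {scale:.5f}  max error: {max_err:.5f}")
```

Tiny weights such as 0.003 round to zero here, which is where accuracy can slip. The error for any single weight stays within half the scale, so the wider the weight range, the coarser the steps.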

How Edge AI Changes the Robotics Conversation

When robots leaned on the cloud, we had to design them around that connection. With edge AI, we can design robots that make decisions the way humans do: on the fly, based on local information.

It’s not just about making faster robots. It’s about building ones that are more aware, more responsive, and more reliable.

For industries that rely on uptime and precision, that shift matters. A self-correcting robot arm. A drone that adjusts its flight path mid-air. A delivery bot that reroutes without being told. These changes open up entirely new possibilities.

What’s Coming Next?

The hardware is getting better. AI models are getting smaller without giving up as much accuracy. Power systems are catching up. That means edge robotics should scale faster in the next few years.

Expect smarter swarm bots. More self-repairing machines. Tighter integration with computer vision, predictive maintenance, and IoT networks.

What this really means is simple: robots are becoming less dependent on the cloud and more intelligent.

FAQ

Q1: Is edge AI better than cloud AI?

Each has its place. Edge AI is better for real-time decisions and privacy. Cloud AI is still useful for large-scale data analysis.

Q2: What devices can run edge AI?

From Raspberry Pi boards to NVIDIA Jetson modules, plenty of compact devices can run edge AI. Which one fits depends on the model size and task complexity.

Q3: Is it expensive to implement edge AI in robotics?

Initial costs can be high due to hardware needs, but the long-term savings from efficiency and lower cloud costs often balance it out.